Golden Gate: Setup Bi-Directional Replication in Multi-tenant Environment (PDBs)

Intro

Golden Gate replication solution allows you to capture, replicate, filter, transform, and deliver real-time transactional data across Oracle or heterogeneous environments.

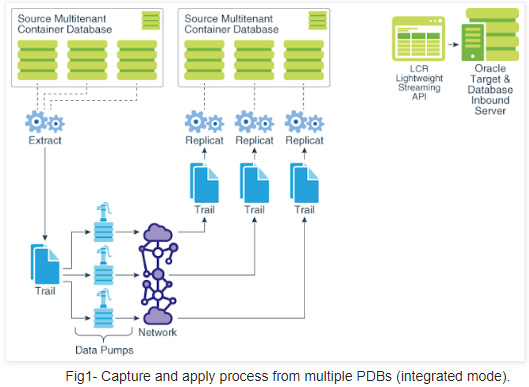

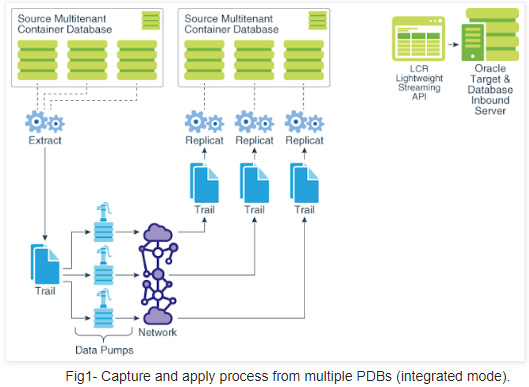

Golden Gate 12c features along with Oracle multi-tenant architecture introduced some changes to the way replication works (see Fig-1). Another interesting fact is that few of Oracle Streams features have now found their way into GoldenGate.

This article describes how to configure Oracle GoldenGate 12c in order to perform a Bi-directional replication between Oracle source and target Container Database 12c. We will be using Integrated Replicat and Integrated Extract since Integrated mode extraction is the only supported method for Multi-tenant databases.

Multitenant specificity

Here are some major changes regarding GoldenGate coupled with Oracle Database 12c multitenant architecture:

- One Extract process can be configured to capture changes from multiple Pluggable Databases (same redo stream is shared by all PDBs ).

- Replicat process can only connect and apply to one Pluggable Database (PDB).

- The extract must operate in integrated capture mode (only supported mode for multi-tenant DBs), a log mining server is involved and this is only accessible from the root container (CDB$ROOT).

- We have to connect as a common user to attach to the logminer on the server.

What will be covered ?

In this post we will focus on the following main steps:

I. GoldenGate Environment Configuration

II. Initial load

III. Apply change data

IV. Bi-directional replication

I. GoldenGate Environment configuration

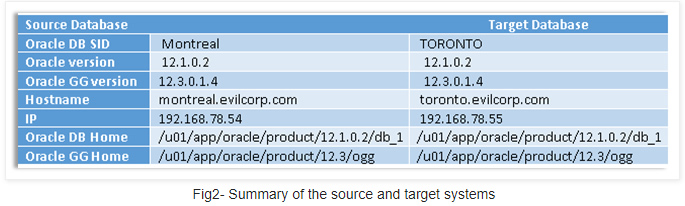

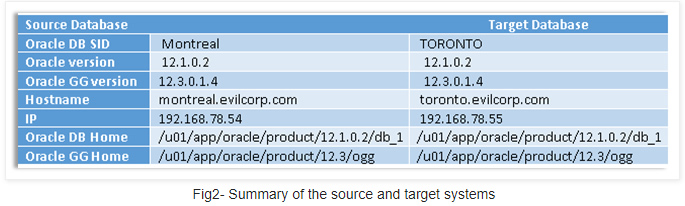

My lab is based on the below source and target systems. Both contain similar Pluggable database called ”PDB”

A- Installation

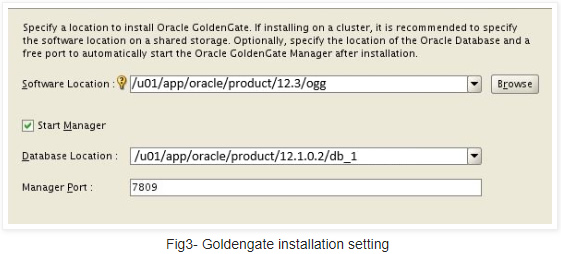

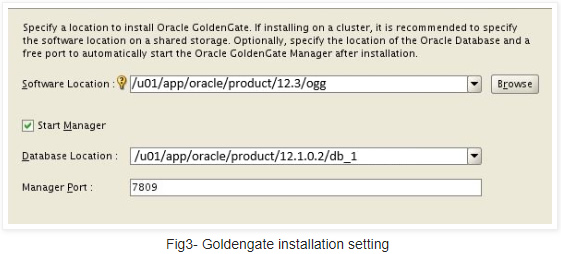

GoldenGate installation is pretty straightforward. Make sure you specify the right GGate and database homes

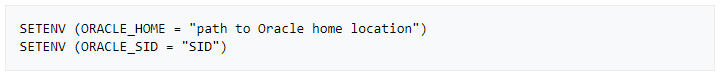

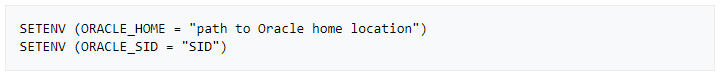

Note: If there is only one GoldenGate installation for multiple DBs you will have to set those variables in the extract and replicat processes directly as below :

B-Preparation

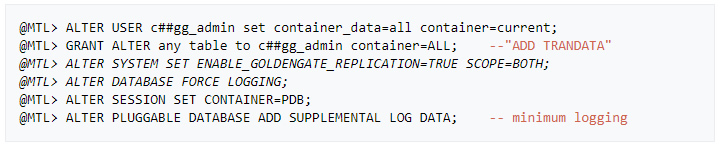

An extract process for a multitenant database must be created at the root container level with a “common” database user and must be defined to run in the “integrated” capture mode. Replicats, on the other hand, must be created at the pluggable database level and can be defined to run in either the “classic” or “integrated” modes.

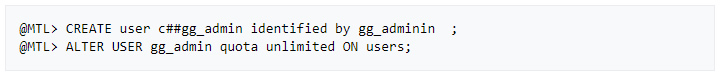

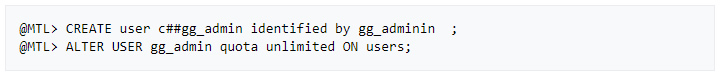

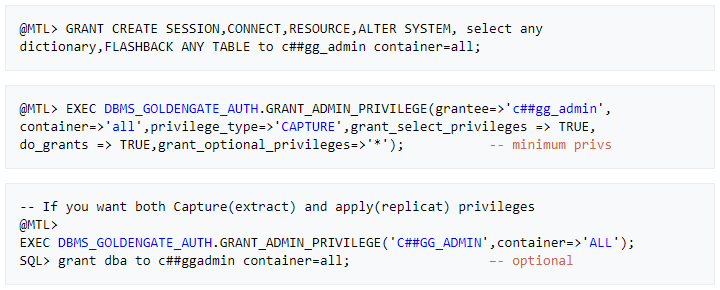

1. On the source system (MONTREAL)

» Create source OGG admin User

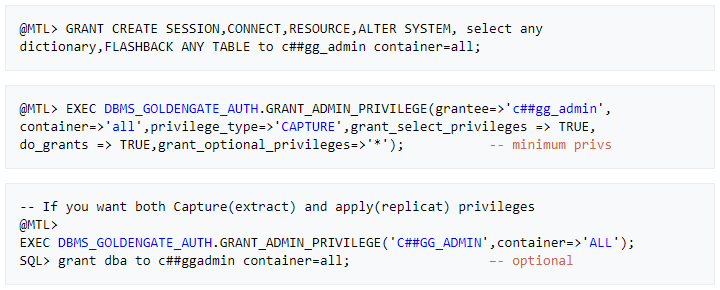

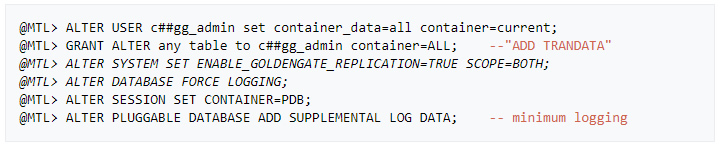

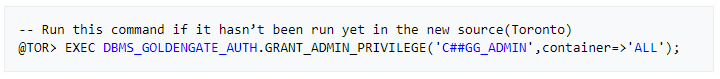

– Assign the correct source privileges to the GoldenGate admin user as shown below

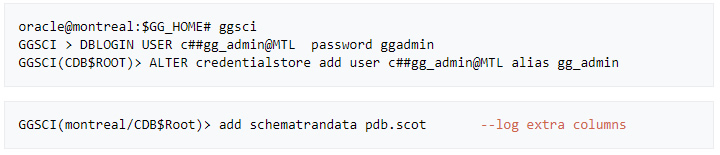

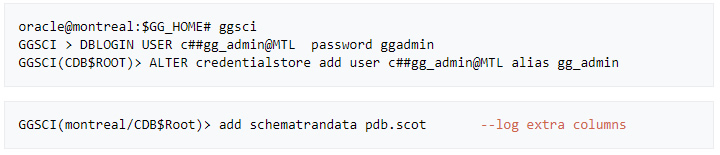

» Add supplemental log data for update operations

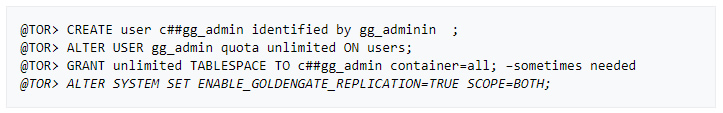

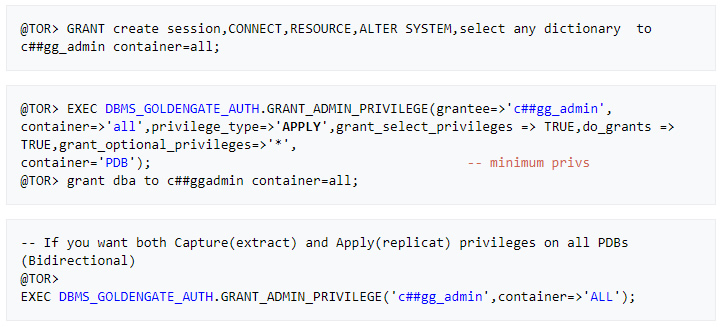

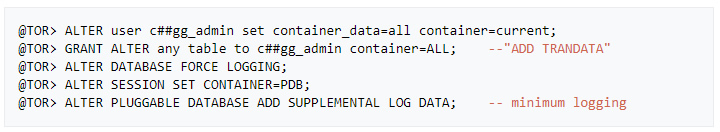

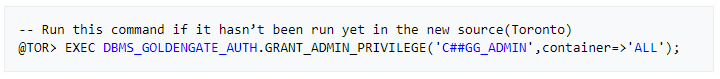

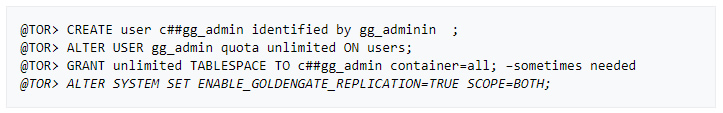

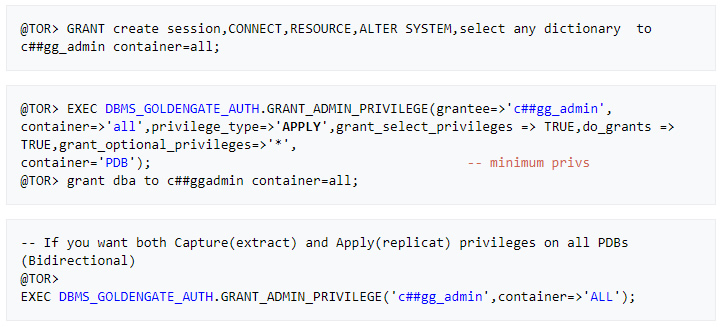

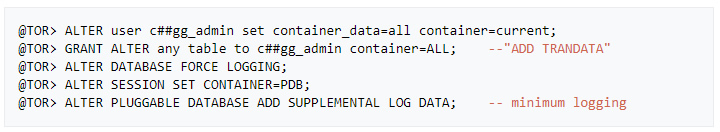

2. On the TARGET system (TORONTO)

» Create target OGG admin User

– Assign the right target privileges to the Golden Gate admin user as shown below

II. Initial Load

This is basically the first stage where the extract captures data directly from source tables and without using trail files.

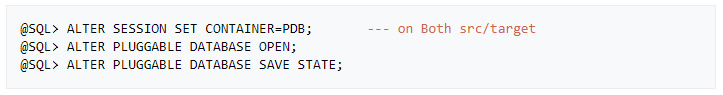

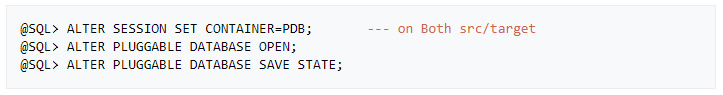

Important: First make sure that both source and target PDB open state is permanent before setting up any replication

A- Prepare the target Tables

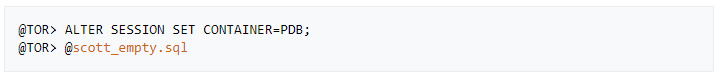

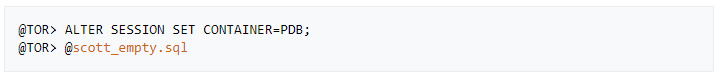

» (Re)Create an empty sample schema “SCOTT” on the target PDB (download script here)

Note: You can run this script @scott.sql in the source PDB if SCOTT schema is not yet created

B- Configuration

1. On the source system (MONTREAL)

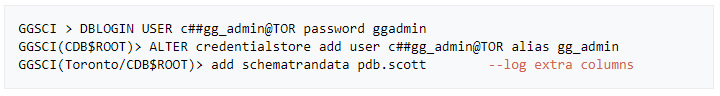

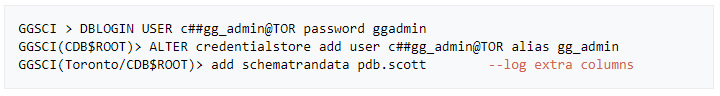

» Configure OGG User alias and schema logging

Note: This lab assumes that tns aliasses have been created for both source and target database and PDBs

@MTL= Montreal , @TOR=toronto , @PDB= PDB in each server respectively

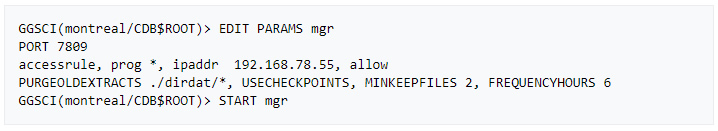

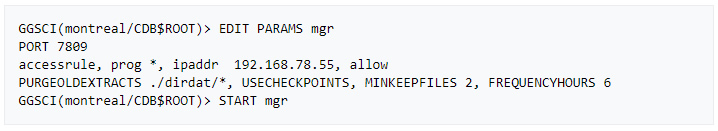

» Allow incoming access to the Manager process

» Create an initial Extract parameter file

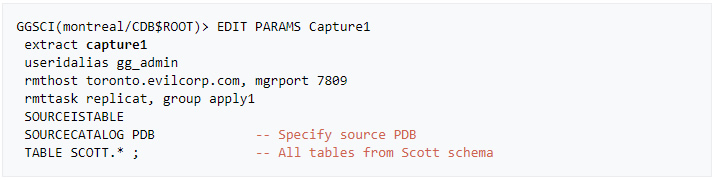

» Add and register the initial extract with the container database

2. On the TARGET system (TORONTO)

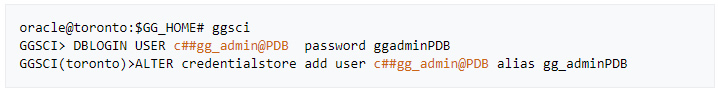

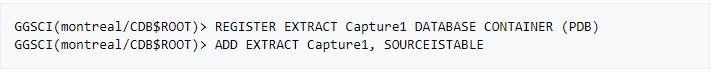

» Add OGG User alias PDB level (can be run from root CDB too)

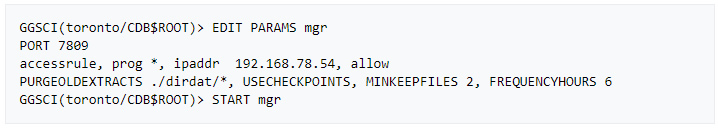

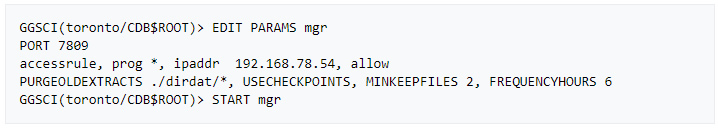

» Allow incoming access to the Manager process

» Create a Replicat parameter file

» Add the integrated replicat

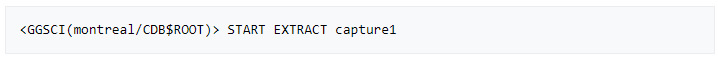

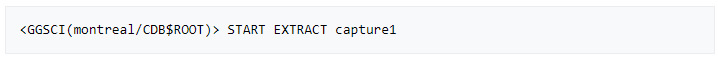

» Start the extract process on the source system

This will allow the target scott schema to be synchronized in a few seconds.

Note: In case datatypes weren’t similar, a definition file is required to handle the mapping using DEFGEN utility

III. Apply Change Data

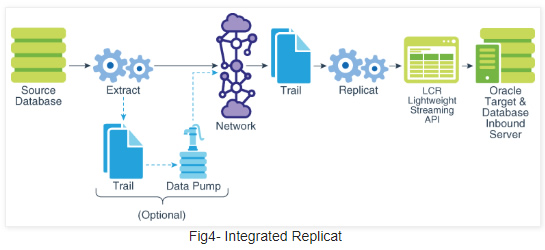

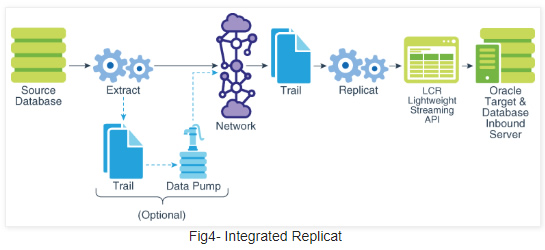

The extract process will pull data from the PDB and send it across the network to the target system. Once data is written to target trail files, the integrated replicat will convert it to LCRs which will be applied to target PDB by the inbound server.

A- Configuration

1. On the source system (MONTREAL)

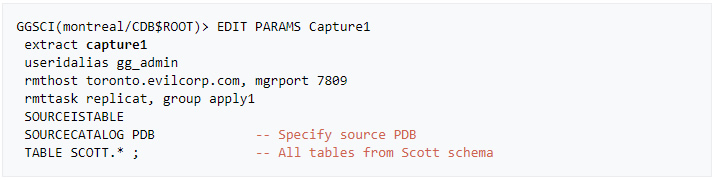

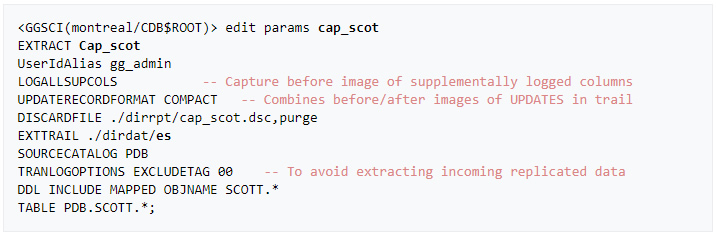

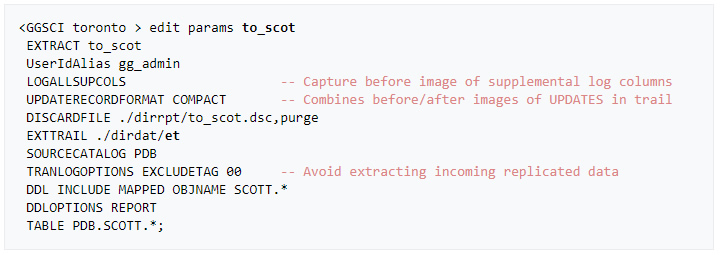

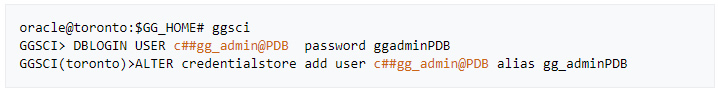

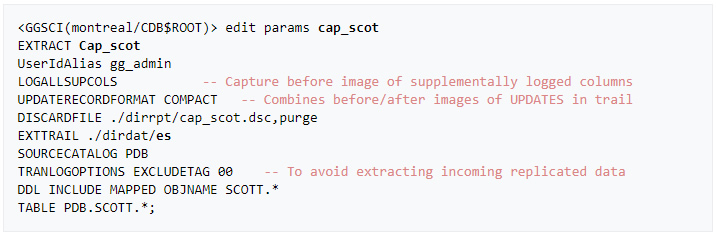

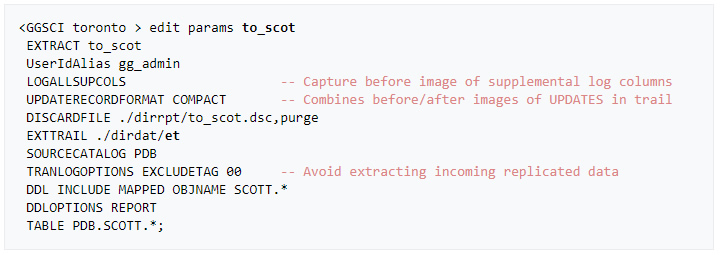

» Create an Integrated Primary extract

Note: TRANLOGOPTIONS is only required for bidirectional replication. I added it in purpose here.

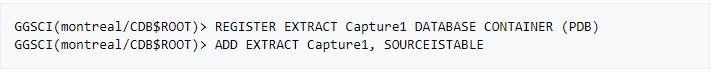

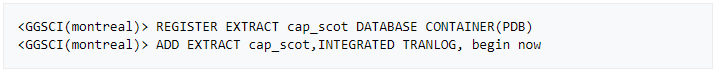

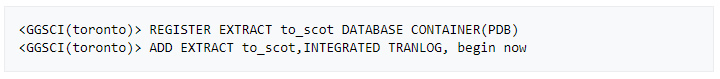

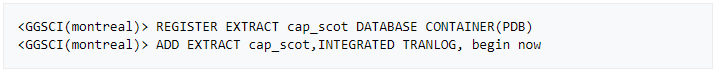

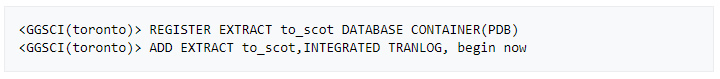

» Add and register the integrated extract with the container database

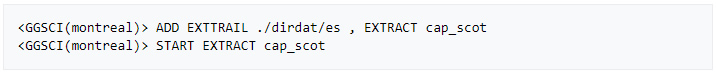

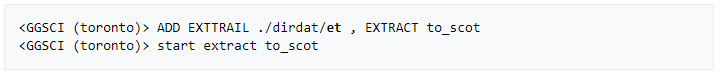

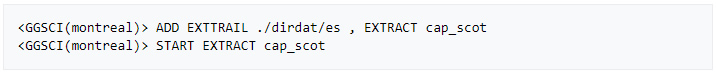

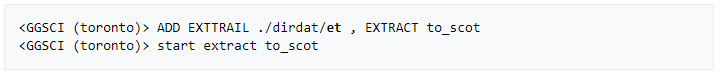

» Create a trail for the Extract group and start it

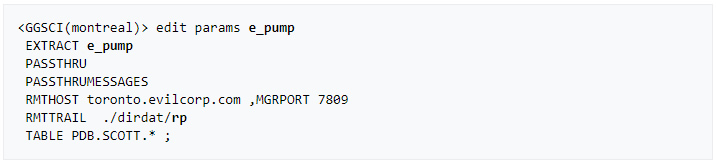

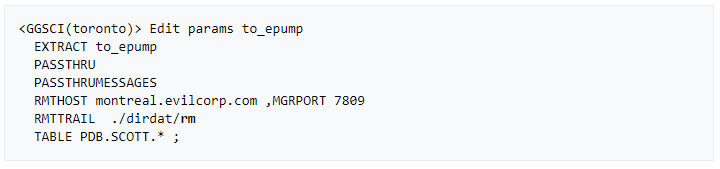

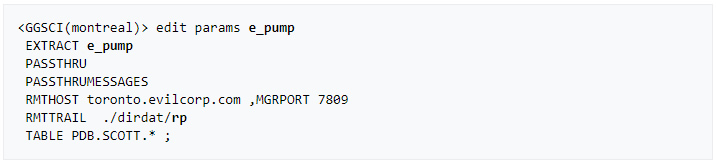

» Create a SECONDARY EXTRACT DATA PUMP (recommended).

This is an optional component for our primary extract helping to move the trail over the network.

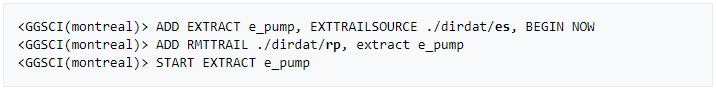

» Create and start a data pump extract

2. On the Target system (TORONTO)

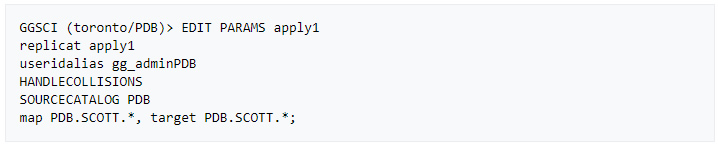

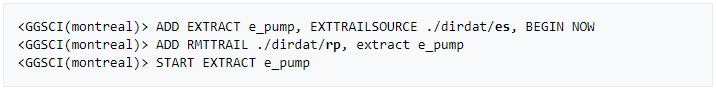

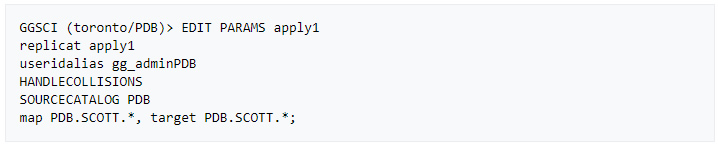

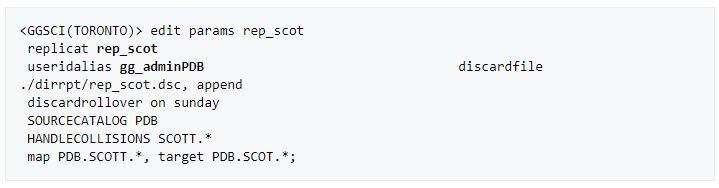

» Create a Replicat parameter file

Note: No need to create a checkpoint table for the integrated replicat unless Data Guard is configured

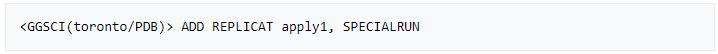

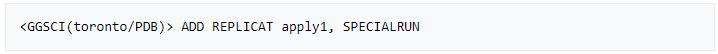

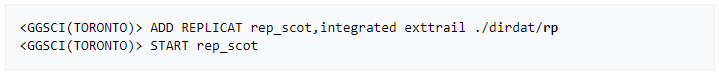

» Add and start the integrated replicat

– Remote trail prefix should be the same as specified in the data pump parameter file (rp)

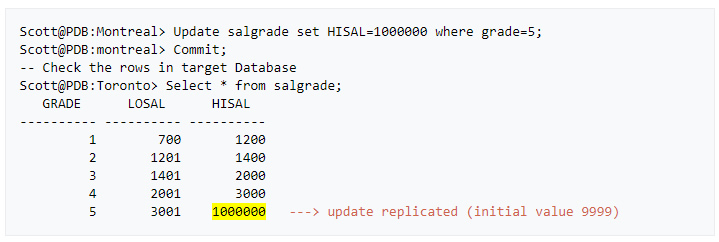

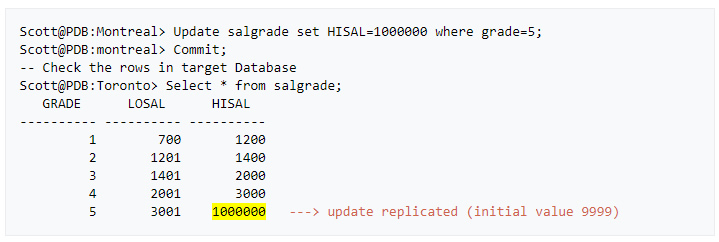

» We can now verify that the data is replicated to the target by performing a little update on the source database

IV. BI-Directional Replication (active-active)

When implementing a bidirectional configuration, you must consider the following areas to avoid data integrity issues:

• Loop detection ( ping pong data behaviour)

• Conflict detection and resolution (CDR) when the same column is modified at the same time on both systems

• Oracle Triggers (Replicat data triggering DML operations on the target system)

Golden Gate 12c already handles the above issues as

- EXCLUDETAG parameter ignores replicat redo stream during the extraction (No Loops)

- LOGALLSUPCOLS parameter supports CDR

- Integrated Replicat doesn’t require disabling dml triggers on the target system.

A- Configuration

As Extract and Replicat processes will be on both servers, I will refer to Montreal as a target and Toronto as a source.

1. On the source system (TORONTO)

» Add supplemental log data for update operations

» Configure OGG User alias and schema logging (root level)

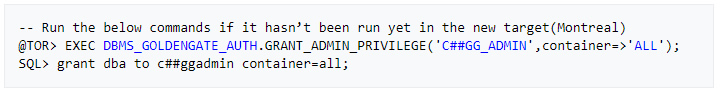

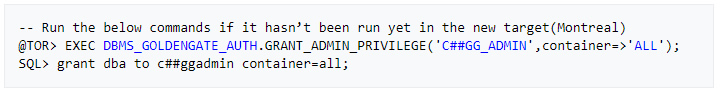

» Verify that the right source privileges are granted to GoldenGate admin user

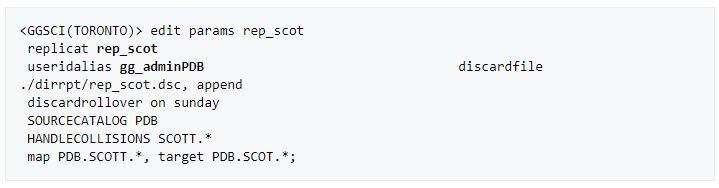

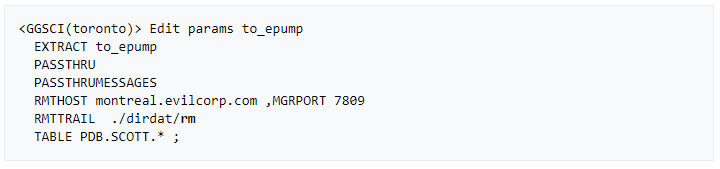

» Create an Integrated Primary extract

» Add and register the integrated extract with the container database

» Create a trail for the Extract group and start it

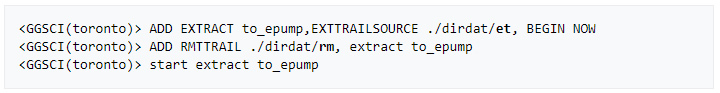

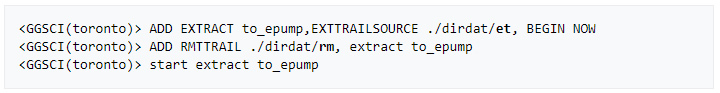

» Create a SECONDARY EXTRACT DATA PUMP

Optional but why not :).

» Create and start a data pump extract

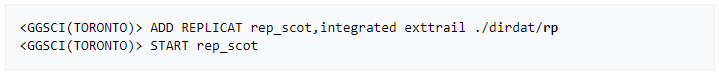

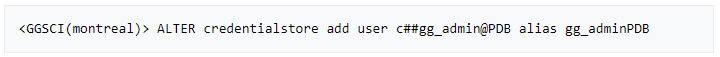

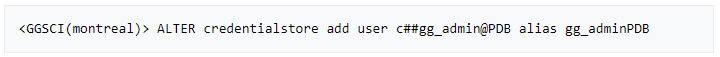

2. On the Target system (MONTREAL)

» Verify that the right source privileges are granted to GoldenGate admin user

» Create an OGG admin User (PDB level)

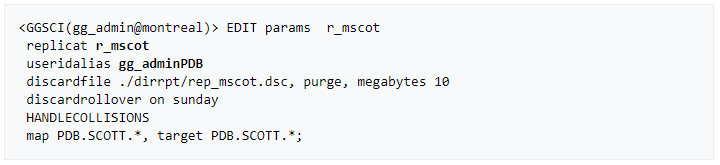

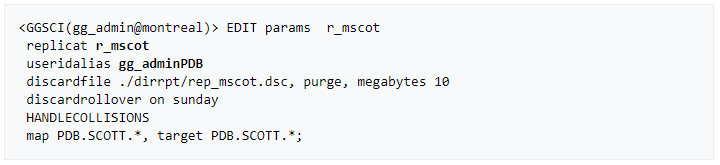

» Create a Replicat parameter file

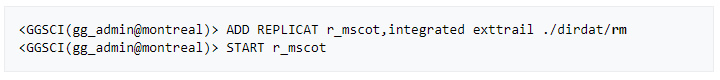

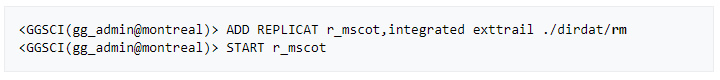

» Add and start the integrated replicat

– Remote trail prefix should be the same as specified in the data pump parameter file (rm)

B- Test & Monitoring

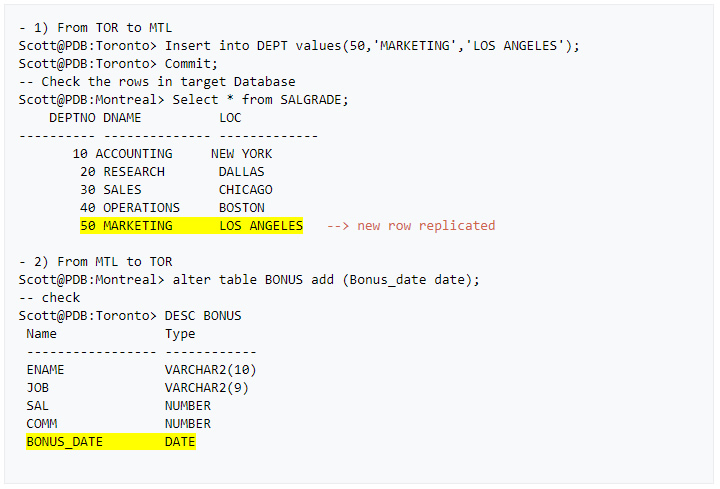

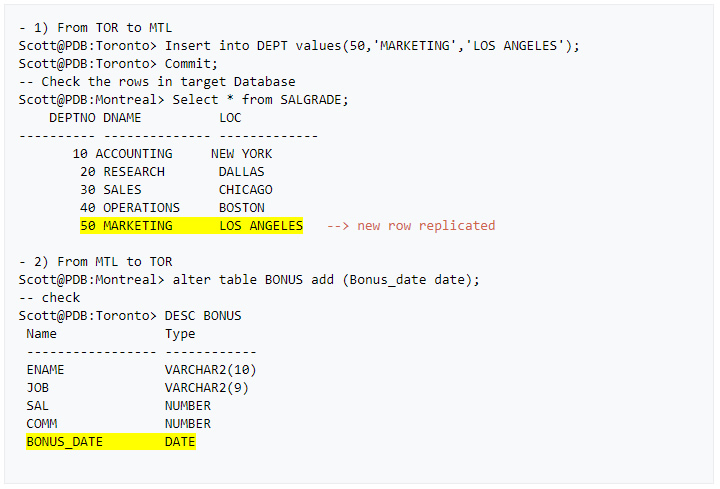

» Let’s verify if data is now replicated in both directions via an insert on the news source and a DDL on target PDB

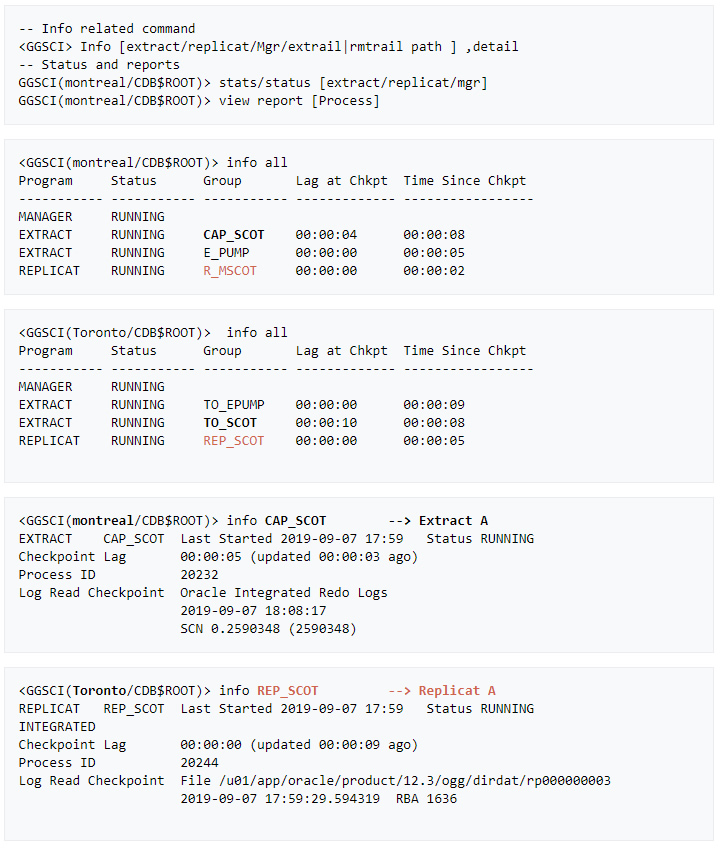

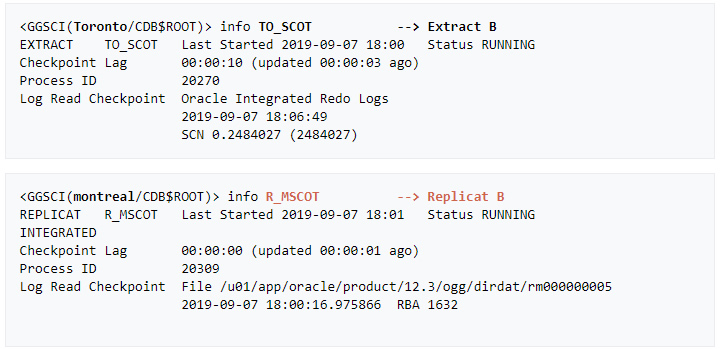

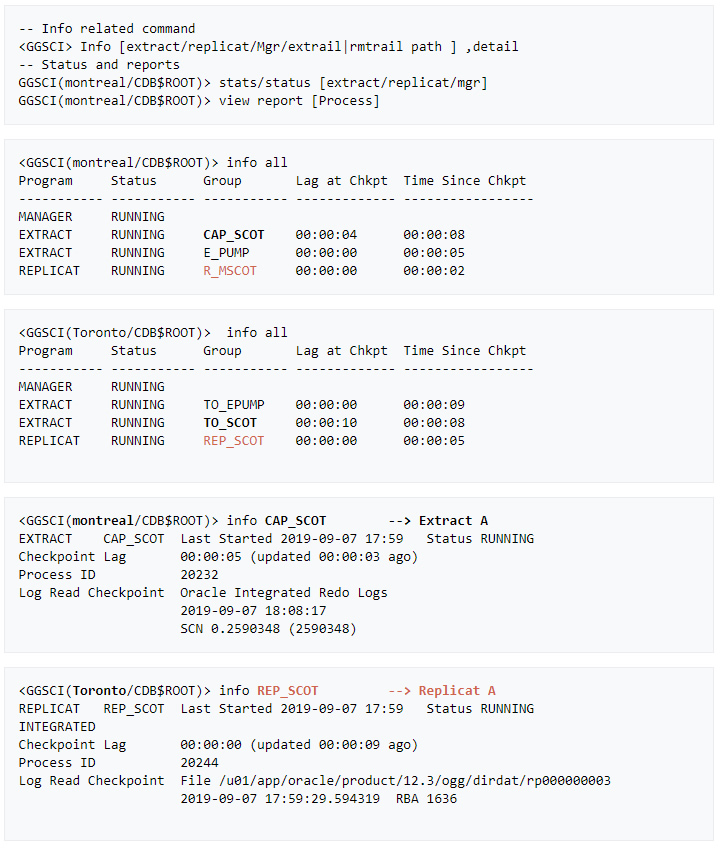

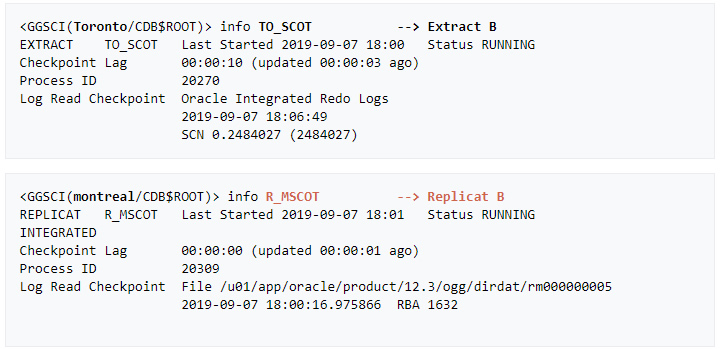

» Last but not least: Here are few GGSCI commands that help monitor the status of the replication processes.

More from this Author

Intro First, before anyone starts sending me print screens about their successful AutoUpgrade, or flexing about how their 100+ databases were smoothly migrated to PDBs over the weekend, I am stopping you right there. This is my honest feedback on the experience I had when trying the tool on a windows environment. It is obviously not... Read More

Intro I have been asked to read about Oracle database fleet management at work but the turnoff was that it relied entirely on OEM. Aggregating both functionalities makes provisioning, in my opinion, cumbersome and unattractive for shops that only want to manage the fleet. Luckily for me, I got acquainted with an even better framework that’s dedicated... Read More